With 79% of senior executives saying their company already deploys some form of AI, the C-suite has collectively bet its businesses on the mass adoption of AI tools. And why not, when there’s an algorithm for everything from hiring to safety, service, and (so much!) more? The main reason for caution is risk.

Senior leaders have a lot riding on the success of any AI project, yet any mistakes in implementation carry regulatory, financial, and reputational risk. And now that regulators have moved from guidance to enforcement, the risk that AI adoption is creating for enterprises demands accountability and ownership from executive leaders.

Adoption is Outpacing Risk Governance

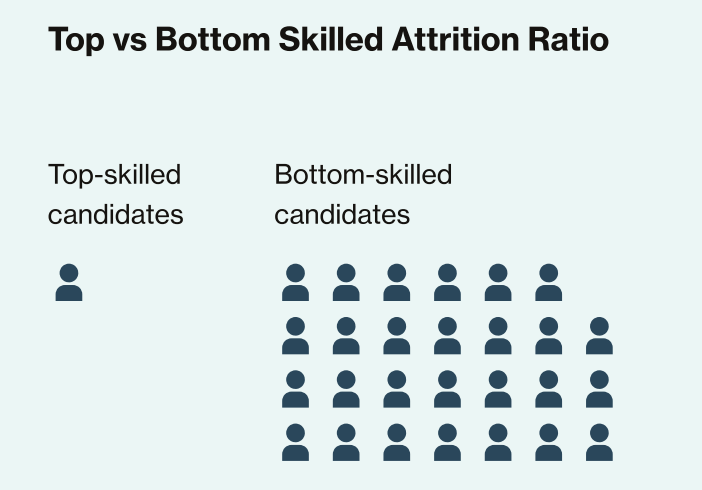

EY recently completed its Responsible AI Pulse Survey of executive leaders and reported a significant gap between how much risk they perceive and how much governance they have in place. Only a third of respondents said they had responsible controls in place for AI models that are already well past the pilot stage.

Another finding was that C-suite executives are more relaxed about the risk AI adoption entails than everyone else. EY reported that the C-suite was only about half as concerned as the general population about the general risks of AI adoption. Within the professional setting, however, CEOs show greater concern for AI risks than their direct reports.

Insights and Strategies to Minimize Enterprise Risk

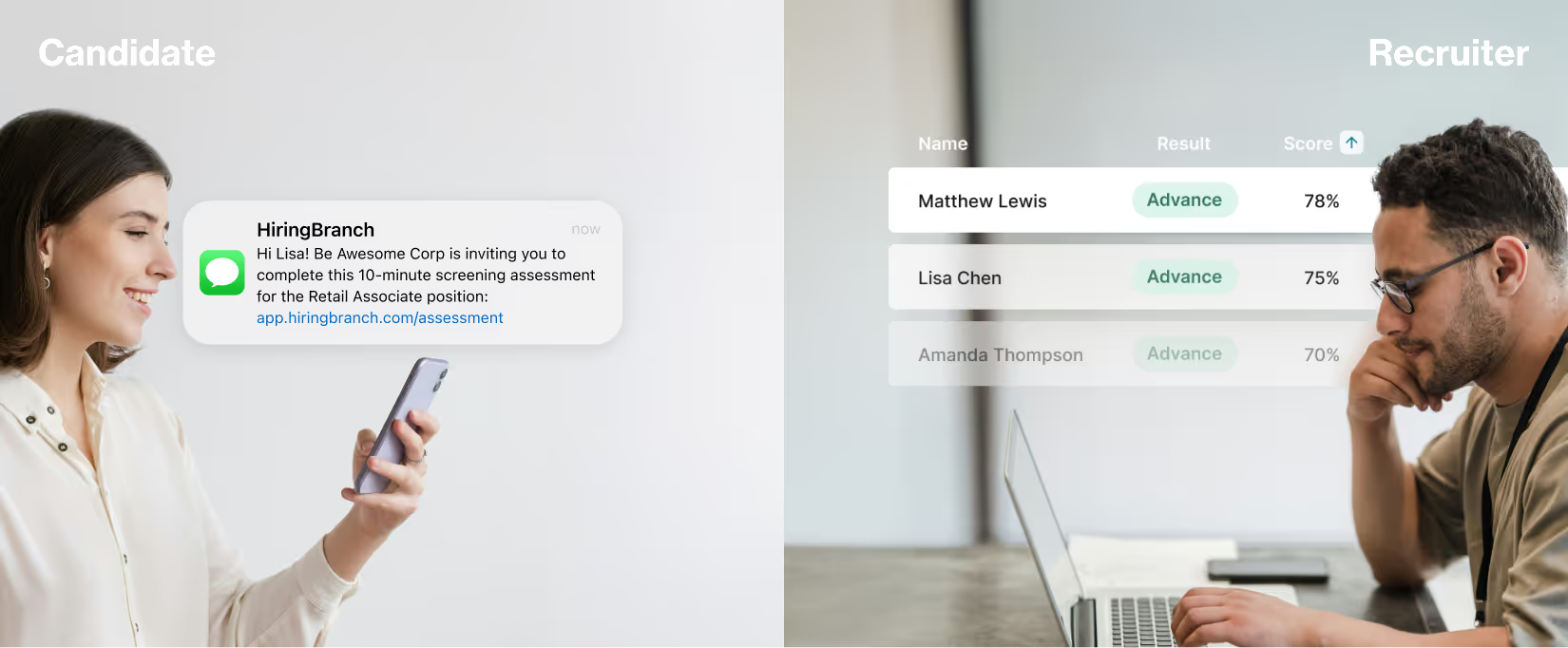

Senior leaders need to weigh potential gains against inherent risks when evaluating new AI vendors, covering everything from data integrity and privacy to audit trails for decisions such as hiring. On top of that, enterprise leaders face inflated claims from vendors and even cases of AI washing, or AI wrappers at best.

Unless a company has real AI infrastructure, like machine learning, neural networks, NLP, image recognition, or generative AI, it may simply be using the term "AI" as a façade without substance. Consider that by this time last year, more than a dozen companies had already been flagged by U.S. and European regulators for making exaggerated or unsupported AI-related claims.

Enterprise leaders are encouraged to scrutinize the abundance of AI providers. Once a vendor shortlist is established, apply rigorous due diligence: understand the flow of data, ask for bias audits, discuss ongoing monitoring, and demand a pilot before the full rollout.

Here are a few more leadership strategies to help enterprise leaders unlock growth from AI responsibly:

- Create an AI task force or committee, or at least name one accountable owner, and give them teeth

- Operationalize explainability by requiring a one-page decision fact sheet for every high-risk system. Assets like event logs and incident playbooks are key to establishing a foundation for AI explainability. If leaders can’t understand it, regulators won’t either.

- Treat data like a regulated asset and minimize the flow of PII

Executive leaders are encouraged to oversee practices like data lineage tracking so they are prepared for an audit or any situation where they have to defend an AI system’s decision.
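For teams that want to see what a decision fact sheet and an event log could look like in practice, here is a minimal Python sketch. Every name in it (`DecisionFactSheet`, `pseudonymize`, `log_decision`, the field names, the sample values) is a hypothetical illustration, not a format prescribed by any regulator or framework. It assumes a salted hash is an acceptable way to keep direct identifiers out of logs; note that this is pseudonymization, not full anonymization.

```python
import hashlib
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone
from typing import List


@dataclass
class DecisionFactSheet:
    """Hypothetical one-page summary of a high-risk AI system."""
    system_name: str
    accountable_owner: str
    purpose: str
    data_sources: List[str]
    human_oversight: str
    last_bias_audit: str  # ISO date string (assumed field)


def pseudonymize(subject_id: str, salt: str) -> str:
    """Replace a raw identifier with a salted SHA-256 hash (truncated)."""
    digest = hashlib.sha256((salt + subject_id).encode("utf-8")).hexdigest()
    return digest[:16]


def log_decision(sheet: DecisionFactSheet, subject_id: str, outcome: str,
                 model_version: str, salt: str) -> dict:
    """Build one event-log entry that carries no direct PII."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "system": sheet.system_name,
        "owner": sheet.accountable_owner,
        "subject": pseudonymize(subject_id, salt),
        "model_version": model_version,
        "outcome": outcome,
    }


# Illustrative values only; none of these come from a real system.
sheet = DecisionFactSheet(
    system_name="resume-screening",
    accountable_owner="VP, Talent Acquisition",
    purpose="Rank applicants for recruiter review",
    data_sources=["applicant tracking system", "job description"],
    human_oversight="Recruiter reviews every rejection",
    last_bias_audit="2025-01-15",
)
event = log_decision(sheet, "candidate-123@example.com",
                     "advance_to_interview", "v1.4.0", salt="rotate-me")

print(json.dumps(asdict(sheet), indent=2))
print(json.dumps(event, indent=2))
```

The fact sheet answers "what is this system and who owns it," while each log entry answers "what did it decide, when, and with which model version." Keeping the two linked by system name is one simple way to support the data lineage questions an auditor may ask.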

Become Familiar with Relevant Governance Baselines

When it comes to avoiding risks associated with AI adoption, leaders don’t need to reinvent the wheel. Multiple credible frameworks already exist to establish a baseline for AI evaluation and monitoring. Here are a few examples:

- NIST AI Risk Management Framework (RMF): Provides guidance for risk identification, measurement, and iterative mitigation across the AI lifecycle, paired with a public playbook that operationalizes controls. Established in January 2023.

- ISO/IEC 42001 (AI management systems): A certifiable standard introduced in December 2023 aimed at aligning policy, process, and continuous improvement for AI.

- OECD AI Principles: Promotes transparency, human oversight, robustness, and accountability for AI actors. Established in 2019 and updated in 2024, the principles are now referenced by more than a thousand policy initiatives in at least 70 jurisdictions.

- EU AI Act: Enforces risk management, data governance, technical documentation, logging, accuracy/robustness, and human oversight across all high-risk AI systems, including any used in hiring.

- NYC Local Law 144 (AEDTs): Requires annual bias audits of automated employment decision tools, along with public posting of the results and advance notice to candidates.

- Search for emerging local regulations: For example, the Colorado Artificial Intelligence Act requires developers and deployers of high-risk AI systems that affect consequential decisions to manage risk and document impact.

Set aside time to review the key legal AI frameworks. Together, these set clear expectations for risk management, documentation, responsible oversight, and auditability.
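To make the idea of a bias audit more concrete, the sketch below computes an impact ratio: each group's selection rate divided by the selection rate of the most-selected group, which is the kind of metric NYC Local Law 144 audits report. The function names, group labels, and counts are hypothetical, and the 0.8 review threshold is an assumption borrowed from the common "four-fifths" rule of thumb, not a pass/fail line set by the law. Treat it as an illustration, not a substitute for an independent audit.

```python
from typing import Dict, List, Tuple


def selection_rates(outcomes: Dict[str, Tuple[int, int]]) -> Dict[str, float]:
    """Map each group to selected / total, skipping groups with no applicants."""
    return {
        group: selected / total
        for group, (selected, total) in outcomes.items()
        if total > 0
    }


def impact_ratios(outcomes: Dict[str, Tuple[int, int]]) -> Dict[str, float]:
    """Divide each group's selection rate by the highest group's rate."""
    rates = selection_rates(outcomes)
    highest = max(rates.values(), default=0.0)
    if highest == 0.0:
        return {group: 0.0 for group in rates}  # nobody was selected
    return {group: rate / highest for group, rate in rates.items()}


def groups_to_review(ratios: Dict[str, float], threshold: float = 0.8) -> List[str]:
    """Return groups below the threshold (0.8 is an assumed heuristic)."""
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Hypothetical counts: (candidates selected, candidates assessed).
outcomes = {
    "group_a": (50, 100),
    "group_b": (30, 100),
    "group_c": (45, 100),
}

ratios = impact_ratios(outcomes)
flagged = groups_to_review(ratios)

for group, ratio in sorted(ratios.items()):
    print(f"{group}: impact ratio {ratio:.2f}")
print("Needs review:", flagged)
```

A low ratio does not prove discrimination on its own, but it tells the accountable owner where to look first and gives them a number to track between audits.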

If you operate globally, lean on a team of experts, because comprehensive guidelines vary by jurisdiction. For example, the Canadian federal Directive on Automated Decision-Making and the UK ICO guidance both emphasize documentation, impact assessment, and human oversight.

Advice: Balance Opportunities with Granular Oversight

Leaders have to separate the AI hype from the actual output AI can deliver for their own organization, down to the KPIs of a given department. Each instance of AI adoption has the potential to supercharge or sabotage a business. Leaders who can effectively operationalize AI will ensure ethical compliance alongside clear value.

A recent publication about cautious AI adoption highlights SME tips and essential advice for enterprises. One quote from a powerful influencer sums it all up:

“Automating routine work is one thing. Redesigning how an entire function operates is another. The greater the promise, the greater the diligence required. AI isn’t just a tool you pick off the shelf. It’s an investment you’re betting your business on.” - Jess Von Bank, Global Leader, HR Digital Transformation & Technology Advisory, Mercer

Consider these words of wisdom as gospel.

For more information about responsible AI adoption, read our latest ebook, no email required.

Image Credits

Feature Image: Unsplash/Priscilla Du Preez
