AI interviews are driving up candidate fraud. Nearly a quarter of applicants could be fake in just a few years, as AI helps candidates cheat both AI and human interviews.

If the title of the post drew you in, the short answer is yes. Companies are using AI to interview candidates, and candidates are using AI to get through those automated interactions, which can lead to fraud. Gartner estimates that by 2028, one in four candidates moving through application processes will be completely fake, and the corporate community is still working out how to respond as the problem unfolds.

Gartner Research Suggests Candidate Fraud is Rising

Gartner's Q4 2024 survey of more than three thousand candidates found that four in ten were using AI during the application process. For example, more than half (54%) used AI to help them write a cover letter or a CV. Similarly, around 30% had used AI for writing samples or to generate text answers on an assessment. This isn’t necessarily fraud, though one senior Gartner researcher notes it makes it “harder for employers to evaluate candidates’ true abilities, and in some cases, their identities.” The researcher warns, “Candidate fraud creates cybersecurity risks that can be far more serious than making a bad hire.”

Fast forward to Q2 2025, and Gartner's survey of around three thousand candidates found that 6% admitted outright to proxy interview fraud (impersonating someone or having someone impersonate them). That is a single survey, and it counts only the candidates who admitted to it, so the true share of candidates participating in interview fraud is likely higher.

Candidate Interview Cheating 101

After security firm KnowBe4 hired a fake IT worker who was actually a persona controlled by a North Korean actor, firms have been on high alert for candidate fraud. Aside from security threats, fraudulent candidates are costly. One survey reported that nearly a quarter of respondents had losses of $50,000 or more, while another 10% said their losses had been greater than $100,000 due to fake candidates.

Candidates have always found ways to cheat the hiring process; however, remote work and the rise of AI, especially deepfake technology, have made it easier. The main ways candidates are using AI to cheat the application process include:

- AI-written cover letters and resumes are prevalent, often embellishing the candidate’s skills so that they pass initial screening

- AI-generated answers during interviews, which are even easier to use when an AI interviewer hosts the session

- Deepfake AI tools allow candidates to mimic another person’s voice and/or appearance, which could be used for impersonation and to mask their identity

While employers are aware of the problem, they still need to rely on technology when operating at scale. Let’s look closer at how candidates are bypassing AI-agent and human interviews.

AI Passing AI Interviews

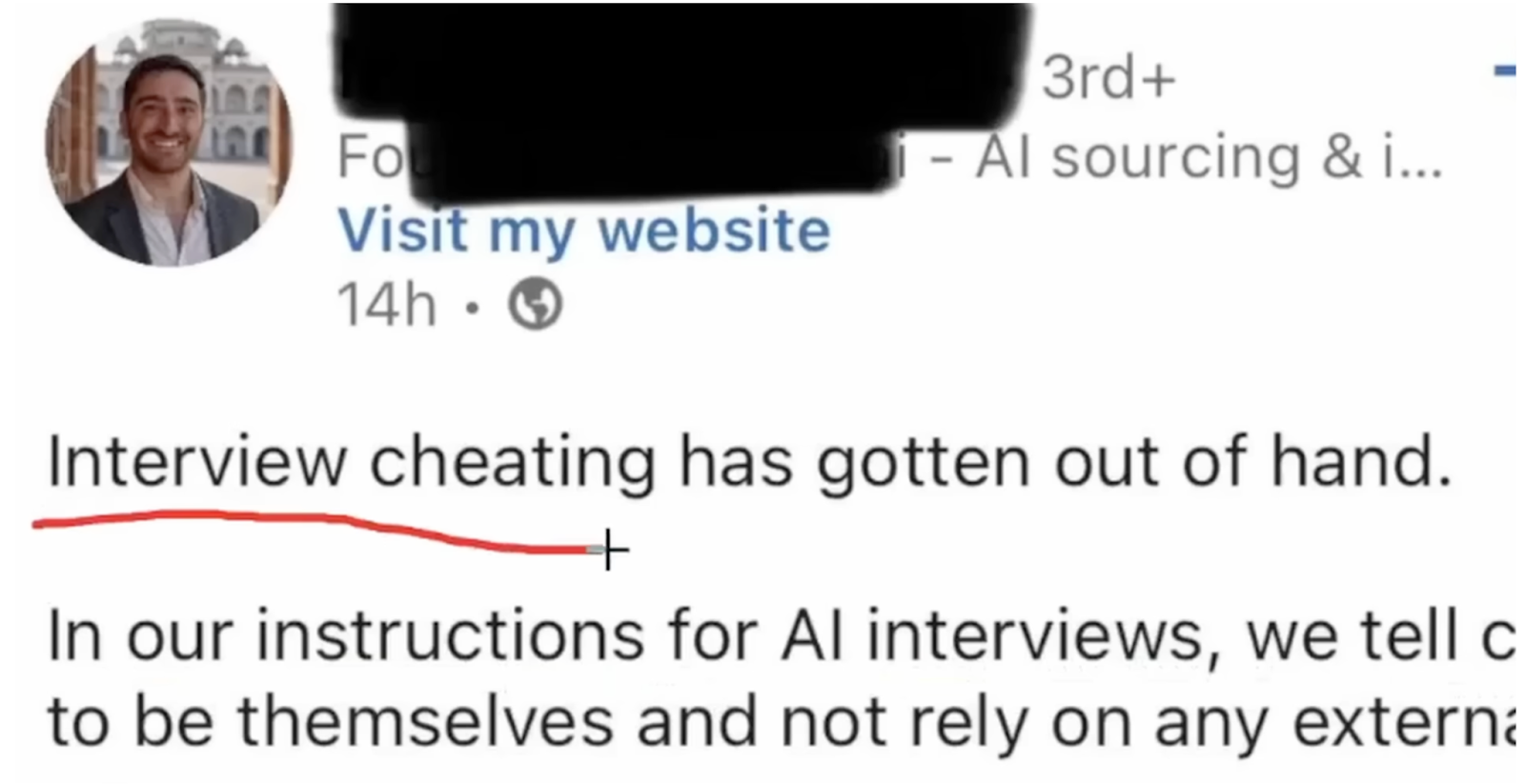

AI interview agents are on the rise, making it easier for candidates to fake their way through the interview process. The Founder of Tenzo took to LinkedIn after a candidate was caught “cheating” on every question they answered for the company's AI interviewer. The Founder explained how they could see the candidate switching tabs and using copy and paste. These comments weren’t necessarily well received by the broader audience. Since Tenzo is an AI company, and employees would be expected to use AI tools as soon as they got the job, the Founder’s LinkedIn message came off as hypocritical.

Influencer Joshua Fluke breaks down why Tenzo’s LinkedIn post caught the attention of the Reddit community:

“We, the company, can save a whole bunch of time and money by leveraging these great, cheap AI tools to replace human labor. But you, the employee, you even try to think about saving some of your time by doing the same thing as us, that’s cheating!”

In Tenzo’s defense, while employees are typically encouraged to use AI responsibly on the job, to do so in an interview process masks the candidate's true abilities. It would also lead to homogenization if the candidates were to all just regurgitate the same ChatGPT answers. In the end, despite the AI interviewer, Tenzo did spot the cheating, and the candidate didn’t pass the interview.

Regardless, the point is that candidates are using AI to find ways to cheat their AI interviewers, and Tenzo’s post proves it. And it doesn’t help that AI interviewer tools are often built off public LLMs, where the anti-cheating code can be easily understood.

When enterprises leave the interview up to an AI agent, they have to ensure the technology behind the agent is secure and robust. When technology reporter MaryLou Costa used the Test Gorilla AI interviewer, it glitched: the interviewer froze mid-speech and eventually crashed. Even when the technology is robust, cheaters find ways around it. One expert predicts that, just as candidates have learned to keyword-stuff their CVs, they will learn to do the same with AI interviewers.

AI Passing Human Interviews

Because AI-led and virtual interviews have made candidate fraud easier, as many as 72% of recruiting leaders are fighting back in 2025 with in-person interviews. The problem is that applicants are also using ChatGPT and other AI engines in live interviews with humans on the other end of the screen. Here’s one hiring manager’s account of that happening in a Zoom interview:

“Some of you may have seen the viral videos advertising web apps that will listen to an online interviewer's questions and give you generative AI answers. I am a hiring manager and recently, we had a candidate on a Zoom interview who was obviously doing exactly this. He would look into his camera when we asked the question. When the question was over he would either make thinking noises for a few seconds or he would repeat our question back to us (I guess because the microphone hadn't picked it up). Then his eyes would focus intently on another part of the screen and he would launch into answers that were simultaneously perfectly composed and oddly stilted.”

It’s important to note that this candidate was promptly rejected by the human interviewers because it was so obvious. That said, it’s not impossible that a less obvious case could fly under the radar.

Cracking Down and Cleaning Up

While completing a human-led interview with every candidate often isn’t possible in high-volume hiring scenarios, a human should be kept in the process. Chief AI Officer Simon Kriss explains, “Ultimately, you will need a human because you can’t let AI do it end-to-end. We’ve already seen where an AI process was done totally end-to-end. They hired someone who turned out to be a complete fake person. Within one hour of being given their credentials, they had hacked internal systems and caused a data leak.”

After a rise in candidate fraud during remote interviews, the FBI issued a warning explaining how to spot deepfaked video and audio. Practitioners should keep the following in mind during their AI-led or human interviews:

- Look for cases where the audio and visual are not synced

- Notice lapses in communication, especially around coughing or sneezing

- Be especially mindful when hiring for roles that have access to sensitive information, like customer or financial information

The FBI’s warning is an indicator that regulators have taken note of the growing AI interview fraud problem. Adding to this, in India, major firms are cracking down on proxy interview fraud, “handing out lifetime bans to candidates caught using stand-ins.” With regulators and enterprises trying to stay on top of the latest vulnerabilities in the AI race, hiring teams need to take all of this in and ask themselves: Can AI pass our interview?

And if the answer is yes, think about switching to a skills assessment, where hiring decisions aren’t based on who a candidate says they are, but rather what they show you they can do.

Image Credits

Feature Image: Unsplash/Yan Kolesnyk
